Microscale computers built into image sensors will speed the processing of the large volumes of image data generated by autonomous vehicles, robots, and other devices that “see.”

Some speed-intensive computing tasks today are seriously held back by the time it takes to move data between components, even though the signals travel at nearly the speed of light, and by the energy that transfer consumes. The field that tackles this problem is known as edge computing: processing is placed as close as possible to where the data is captured, quite literally at the “edge,” to reduce transfer time and energy demand.

Toward that goal, researchers at SRI, under a contract from the Defense Advanced Research Projects Agency (DARPA), have produced a next-generation approach known as in-pixel image processing (IP2) that will help image-based, data-intensive applications accelerate computation while using less energy to do it.

“We have simulated super-small computer processors that can be squeezed between the pixels of an image sensor, allowing us to process the data in the sensor itself,” explains David Zhang, senior technical manager in the Vision Systems Lab at SRI’s Center for Vision Technologies and principal investigator on IP2. “Every four pixels in the imaging grid is surrounded by its own processor.”

High-risk applications

Such data-intensive applications are common in self-driving vehicles, for instance, where both the amount of data collected and the importance of processing it rapidly are extremely high: lives are at stake. In the cameras these vehicles use, the image sensors that capture light and the chips that process the data are already sandwiched together back-to-back. And yet, even that close proximity is not enough. Computer vision engineers, Zhang chief among them, are constantly searching for ways to speed up those lightning-fast transactions.

IP2 is the next step in that evolution, bringing processing to the pixels themselves. That proximity, Zhang says, will allow the sensor to make millisecond decisions about the value of the data it is about to transmit, separating important pixels from less important ones, depending on the task, to reduce the amount of data the neighboring chip must process.

The central technical principle is that the sensor capturing the photonic signals decides what to transmit based on a predictive mask generated from the previous frame. The mask determines which pixels (e.g., those covering objects in the image) are meaningful and which are not. Only that salient data is then sent to the edge processor directly behind the image sensor, which runs anticipatory control AI models.
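The mask-gated readout described above can be illustrated with a small Python sketch. The article does not spell out how IP2 builds its mask, so everything here is an assumption: the mask is derived from hypothetical bounding boxes of objects found in the previous frame, each grown by a small margin to allow for motion, and the readout forwards only the masked pixels. The names `predictive_mask` and `gated_readout` are illustrative, not part of any SRI interface.

```python
from typing import List, Tuple

Frame = List[List[int]]
Mask = List[List[bool]]
Box = Tuple[int, int, int, int]  # (row0, col0, row1, col1), end-exclusive


def predictive_mask(prev_boxes: List[Box], height: int, width: int,
                    margin: int = 1) -> Mask:
    """Mark pixels covered by objects seen in the previous frame.

    Each box is grown by `margin` pixels (an assumed allowance for motion)
    and clipped to the sensor bounds.
    """
    mask = [[False] * width for _ in range(height)]
    for r0, c0, r1, c1 in prev_boxes:
        for r in range(max(0, r0 - margin), min(height, r1 + margin)):
            for c in range(max(0, c0 - margin), min(width, c1 + margin)):
                mask[r][c] = True
    return mask


def gated_readout(frame: Frame, mask: Mask) -> List[Tuple[int, int, int]]:
    """Forward only salient pixels, as (row, col, value) triples."""
    return [(r, c, value)
            for r, row in enumerate(frame)
            for c, value in enumerate(row)
            if mask[r][c]]


if __name__ == "__main__":
    H, W = 8, 8
    frame = [[r * W + c for c in range(W)] for r in range(H)]
    # One object occupied rows 2-3 and columns 2-3 in the previous frame
    mask = predictive_mask([(2, 2, 4, 4)], H, W, margin=1)
    sent = gated_readout(frame, mask)
    print(f"sent {len(sent)} of {H * W} pixels ({len(sent) / (H * W):.0%})")
```

In this toy 8×8 frame, the 2×2 object box plus a one-pixel margin covers 16 of the 64 pixels, so the demo prints `sent 16 of 64 pixels (25%)`. A hardware implementation would more likely apply the mask implicitly at readout than attach coordinates to every value; the triples here are only for readability.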

Separating this visual wheat from the chaff, Zhang says, allows the image sensors and processors to operate far more efficiently in terms of both data and energy. In simulations, IP2 achieves a tenfold reduction in bandwidth and more than tenfold improvements in power and speed.


“Essentially, we put logic gates around the pixels. The sensor itself becomes an AI inferencing engine, helping us select which data is important and which is less so, to save time and energy yet achieve the level of intelligent performance these high-sensitivity applications demand,” Zhang says.

Where rubber meets road

He explains using a hypothetical self-driving car. In such applications, he says, one can easily imagine the risk of getting something wrong; there is little if any margin for error. At the same time, Zhang explains, the data demands are so significant that the reward for achieving exceptional results at faster speeds with less energy is equally high.

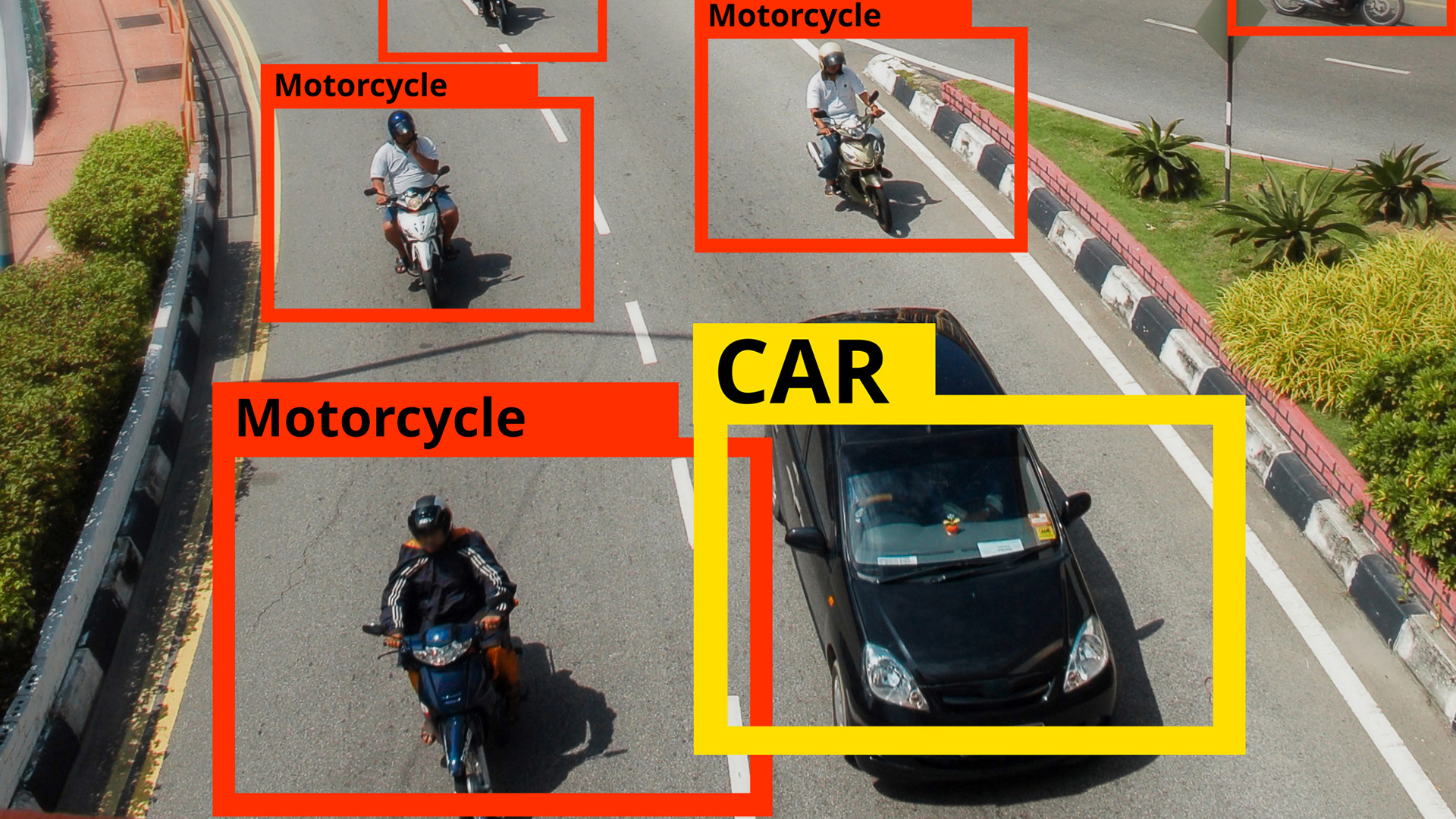

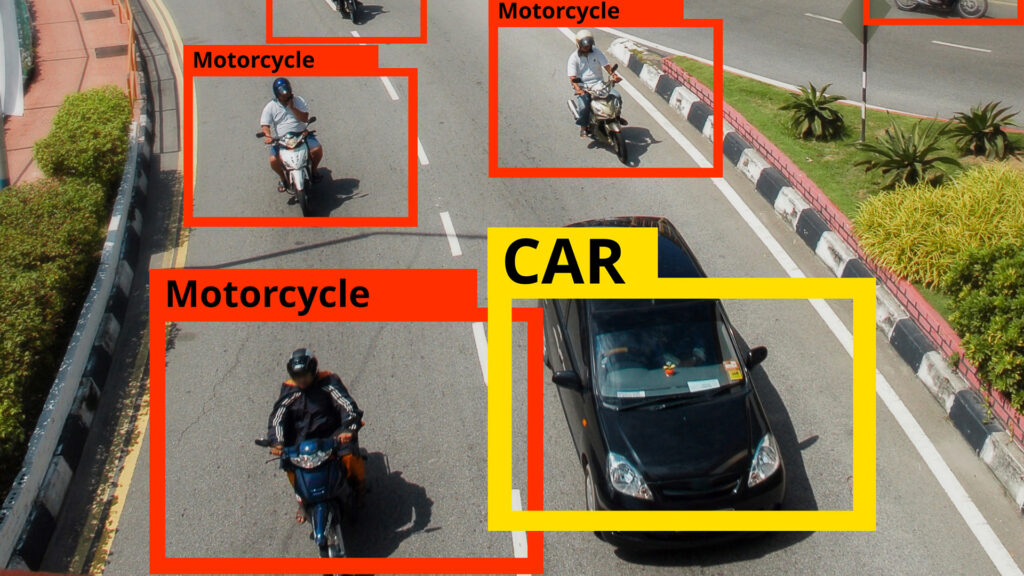

Zhang asks us to imagine a camera fixed to a car moving down a busy city street. The car sees the street ahead. It sees other vehicles. It sees pedestrians and bicyclists. It sees intersections. But it also sees the hood of the car, the blue sky above, the trees and buildings, and the roofs of nearby houses.

“This is irrelevant information to the car, the driver, and the other people nearby. IP2 helps us decide which to take seriously and which we can de-prioritize to ease our processing demands,” Zhang says.

Zhang then describes another way IP2 helps reduce processing and energy demand: improved object prediction. For detected objects, whether moving or stationary, IP2 calculates and predicts their trajectories relative to the sensor. When confidence in a prediction is high, IP2 does not need to detect and process an object's identity and position in every frame. Those resources can instead go to information that needs more attention, or to exploring unknown regions to capture anomalies.
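Confidence-gated tracking of this kind can be sketched in a few lines of Python. The article does not say how IP2 predicts trajectories or scores confidence, so this sketch swaps in the simplest stand-ins: a constant-velocity predictor, a confidence value that decays each frame detection is skipped, and a hypothetical `detect` callback standing in for the expensive detection step. `Track`, `step`, `threshold`, and `decay` are illustrative names, and the confidence-reset rule is an assumption.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

Point = Tuple[float, float]


@dataclass
class Track:
    pos: Point         # last known (x, y) position relative to the sensor
    vel: Point         # estimated displacement per frame
    confidence: float  # 0..1: how far the trajectory prediction is trusted


def predict(track: Track) -> Point:
    """Constant-velocity extrapolation one frame ahead (assumed model)."""
    return (track.pos[0] + track.vel[0], track.pos[1] + track.vel[1])


def step(track: Track, detect: Callable[[Point], Point],
         threshold: float = 0.8, decay: float = 0.9) -> bool:
    """Advance one frame; return True if the detector had to run."""
    guess = predict(track)
    if track.confidence >= threshold:
        # Trust the trajectory: skip detection, but erode confidence so
        # the object is still re-checked periodically
        track.pos = guess
        track.confidence *= decay
        return False
    # Low confidence: run the (expensive) detector near the prediction
    measured = detect(guess)
    track.vel = (measured[0] - track.pos[0], measured[1] - track.pos[1])
    # Restore full confidence only if the prediction was close (assumed rule)
    error = abs(measured[0] - guess[0]) + abs(measured[1] - guess[1])
    track.confidence = 1.0 if error < 1.0 else 0.5
    track.pos = measured
    return True


if __name__ == "__main__":
    frame_no = 0

    def detect(guess: Point) -> Point:
        # Stand-in detector that ignores `guess` and returns ground truth:
        # an object moving +2 in x per frame
        return (2.0 * frame_no, 0.0)

    track = Track(pos=(0.0, 0.0), vel=(2.0, 0.0), confidence=1.0)
    for frame_no in range(1, 9):
        ran = step(track, detect)
        print(frame_no, "detect" if ran else "predict", track.pos)
```

With the default threshold of 0.8 and decay of 0.9, an object moving steadily is re-detected only every fourth frame: confidence falls from 1.0 to 0.9, 0.81, and 0.729, drops below the threshold, triggers one detection, and resets. The skipped detections stand in for the resources the text says can be redirected elsewhere.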

Eye of the beholder

Because it imitates the biology of the human eye, which jumps rapidly between points of interest in movements called saccades, the technique is known as a “saccadic mechanism.” IP2’s saccadic behavior lets it scale up to large numbers of objects in a crowded scene without slowing down processing. The in-pixel processing needs to attend only to new and important signals, while the back-end processing manages the trajectories of all known objects and predicts masks for future exploration.

What remains, thanks to in-pixel image processing, is only the most critical data the car needs to know about: other vehicles, pedestrians, bicyclists, animals, objects to be temporarily de-prioritized, and so forth.

“IP2 provides a novel way for image sensors to know where to look, what to look at, and how to look. It is a new dimension in data communication such that bandwidth can be dynamically adjusted without delaying critical information delivery for real-time decision making,” Zhang concludes. “Bottom line: The computers are adapting in real time based on the communication cost of the data. It’s a cool technology.”

This research was, in part, funded by the Defense Advanced Research Projects Agency (DARPA) under Agreement No. HR00112190119. The views and conclusions contained in this document are those of the authors and should not be interpreted as representing the official policies, either expressed or implied, of the U.S. Government.

Distribution Statement ‘A’ (Approved for Public Release, Distribution Unlimited)