In the age of disinformation, SRI helps develop cutting-edge AI tools that can detect manipulated news.

The tools of modern media are now so powerful that bad actors can synthesize entire news stories from thin air, including text, audio, images, and video, all with the sheen of credibility. Known as manipulated media, such stories are created to deceive for political, reputational, or financial gain. Far from benign, disinformation can threaten national security, and identifying manipulated media has taken on greater urgency.

A group of AI experts from SRI and collaborators at the University of Maryland, the University of Washington, and the University at Buffalo received a $10.9 million grant from the Defense Advanced Research Projects Agency (DARPA) to create a media analysis tool that detects manipulated media. The initiative, known as SemaFor (a portmanteau of “semantic forensics”), uses AI to analyze text, images, video, and audio to identify the subtle but significant clues that separate the real from the manipulated.

Defeating disinformation

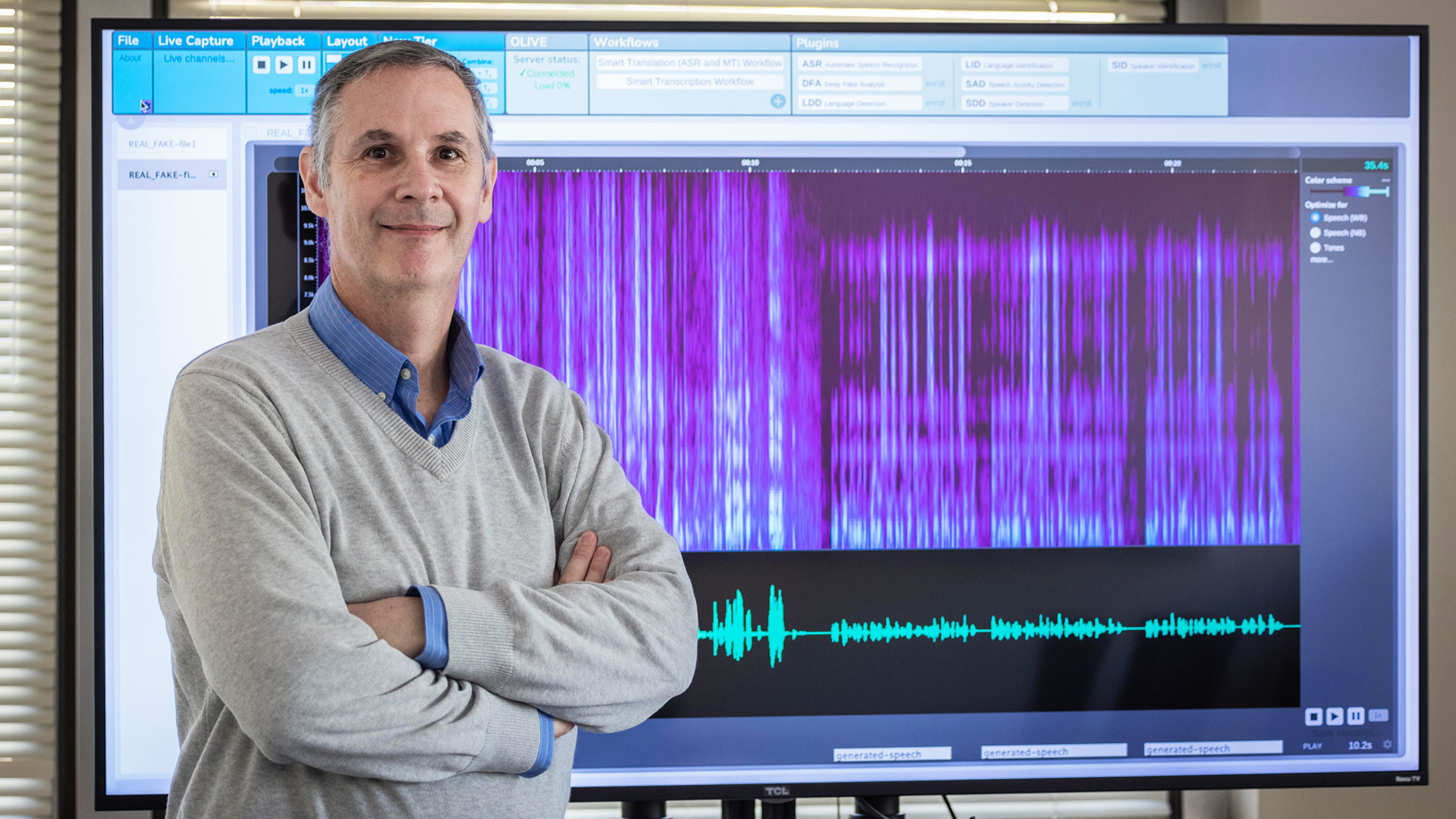

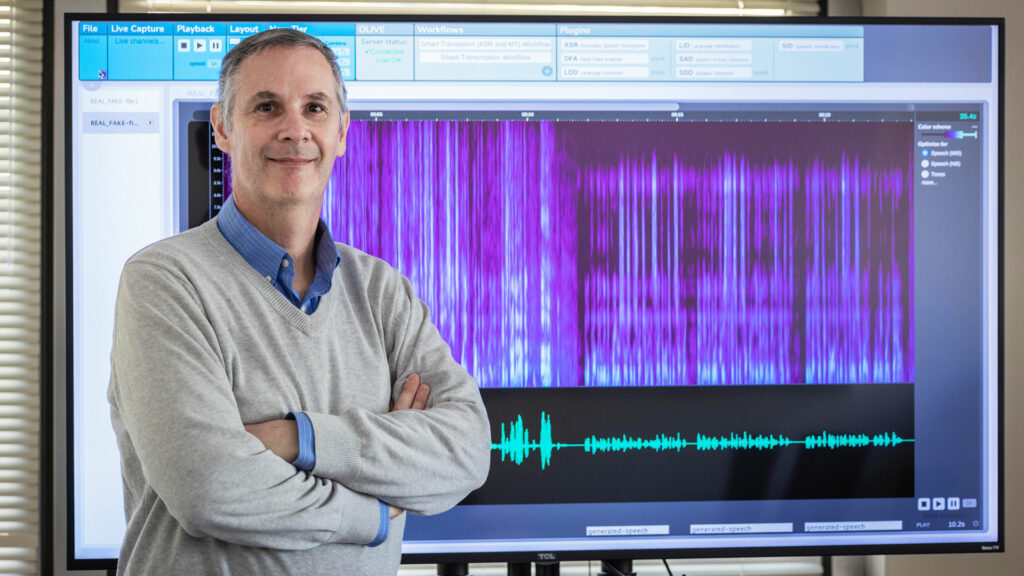

“Our team’s main goal is to build tools to defend against large-scale, online, automated disinformation attacks that aim to intentionally spread false information,” said Martin Graciarena, SRI’s principal investigator on the SemaFor project and senior manager of computer science at SRI’s Speech Technology and Research (STAR) Lab. “We are developing technologies that automatically detect, attribute, and characterize falsified multimedia stories circulating online to defend against large-scale disinformation attacks.”

“When someone wants to plant a falsified news story, the tools to create them are very good, but it’s still challenging to get every nuance of the language, attribution, imagery, video, and audio just right,” Graciarena said. “Our system can look at all these aspects in total to discern when something is ever-so-slightly amiss. When taken as a whole, these clues can tell us when a news story has been manipulated.”

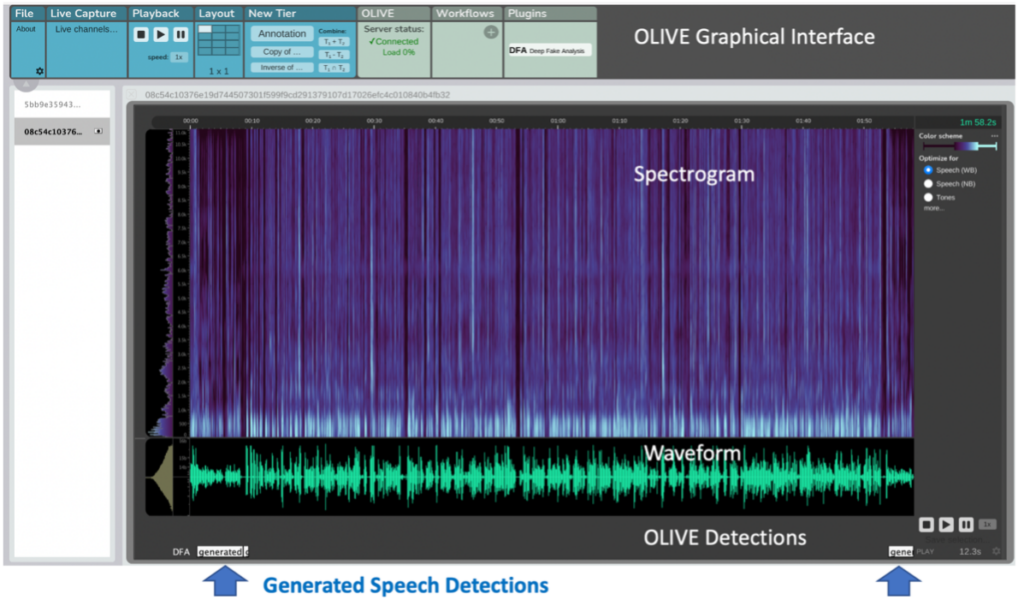

Graciarena shared that some of the current manipulation detection tools developed under SemaFor are being integrated into SRI’s OLIVE (Open Language Interface for Voice Exploitation) platform. In the accompanying figure, a speech waveform has been manipulated by inserting segments of generated (synthesized) speech. This is an important case of misinformation: the inserted segments contain synthesized words that the original speaker never uttered, and they can completely change the message of the original, unaltered recording.

The OLIVE graphical user interface (GUI) displays the spectrogram (spectral frequency content over time), the waveform (signal amplitude over time), and, at the bottom, the regions detected as possibly generated speech, time-aligned with the waveform and spectrogram. In this case, the OLIVE system correctly detected and temporally localized two different regions where speech was synthesized.

While their main objective is defeating intentional disinformation, the technologies developed under SemaFor also aim to detect manipulations as subtle as the misattribution of a piece to a reputable source when it was actually produced by a less reputable or nefarious one.

The potential harm from synthetic media is considerable. Ill-intentioned parties have used emerging media tools to create everything from fabricated apartment rental ads and false online dating profiles to more nefarious uses like replacing dialog in videos to put words in the mouths of those who never said them. These bad actors have used disinformation to disrupt elections, erode faith in once-trusted institutions, discredit highly influential people, commit financial fraud, and more.

“We are in an arms race between the developers of false information, who benefit from media production tools that are growing stronger by the day, and those of us developing detectors to spot their manipulated media,” Graciarena said.

This research was developed with funding from the Defense Advanced Research Projects Agency (DARPA). The views, opinions and/or findings expressed are those of the author and should not be interpreted as representing the official views or policies of the Department of Defense or the U.S. Government.